(3/25_Updated_footage version)

This project combines a material experiment with a mycelium network simulation. The ultimate goal is to bring humans closer to fungi, raise awareness, and explore uses for mycelium by holding workshops and crowdsourcing ideas from the public.

material experiment

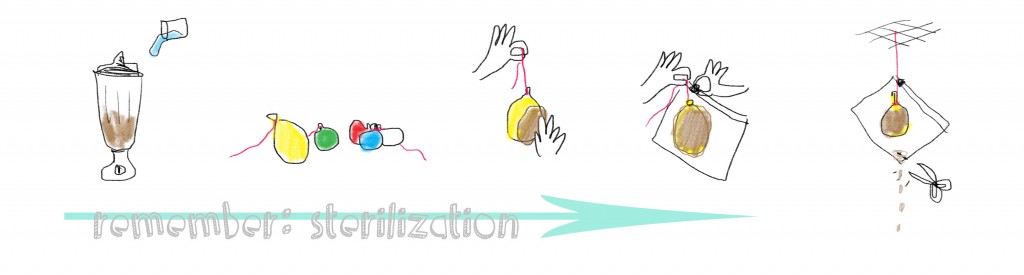

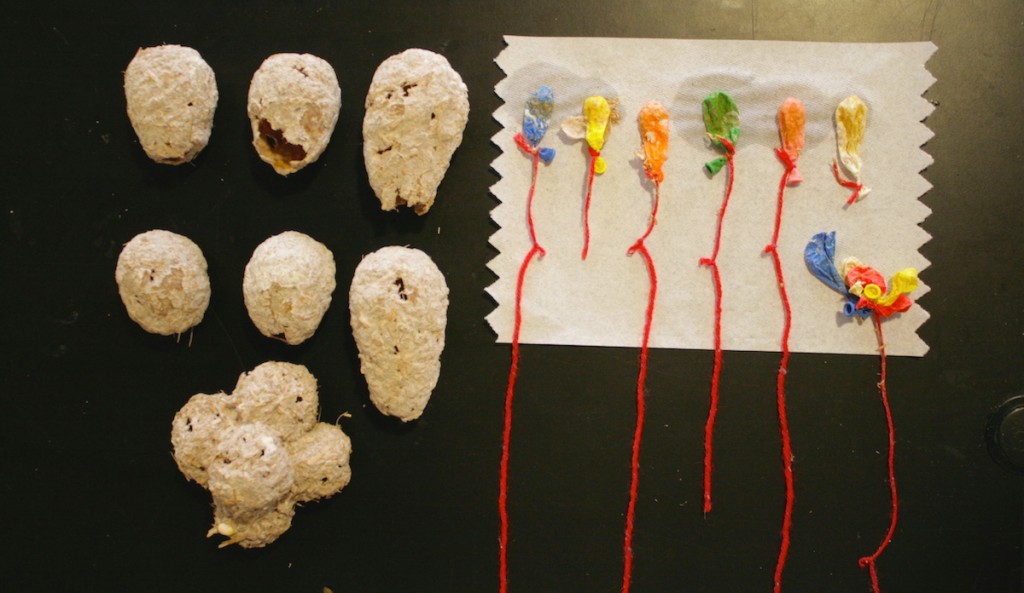

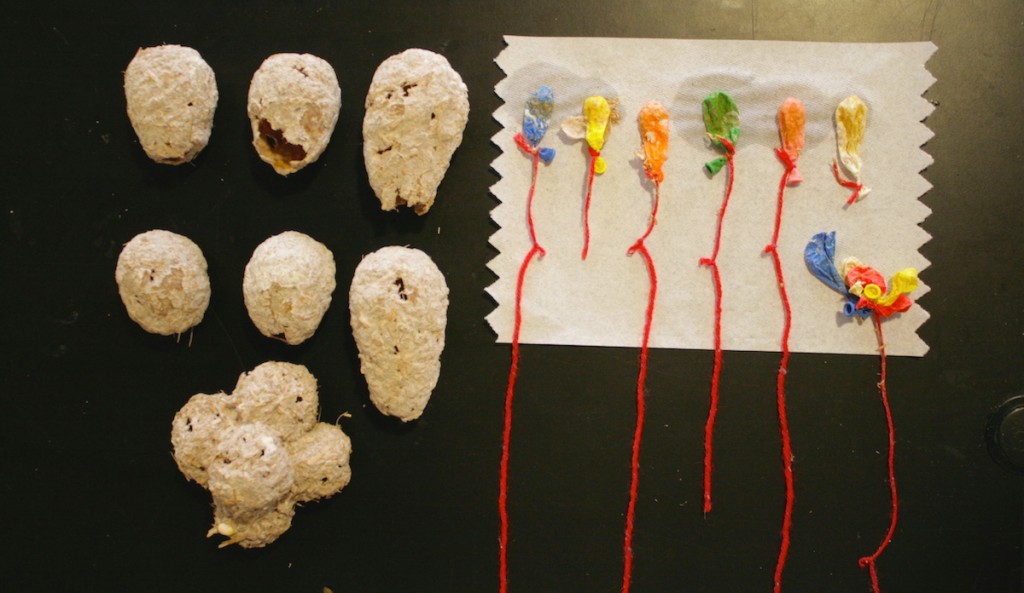

In 2007, Eben Bayer and Gavin McIntyre noticed mycelium’s self-assembling, glue-like character. By growing mycelium on agricultural byproducts, they developed a biological, durable, and compostable material that performs well, and they founded a company called Ecovative. Their products are pressed into shape during production, so they are thick, chunky, and volumetric. Inspired by artist Eric Klarenbeek’s 3D printed case with straw, I guessed that as long as I follow the principle that “mycelium digests nutrients and water and grows harder”, the production process can be free-form and without boundaries. So I gave it a try.

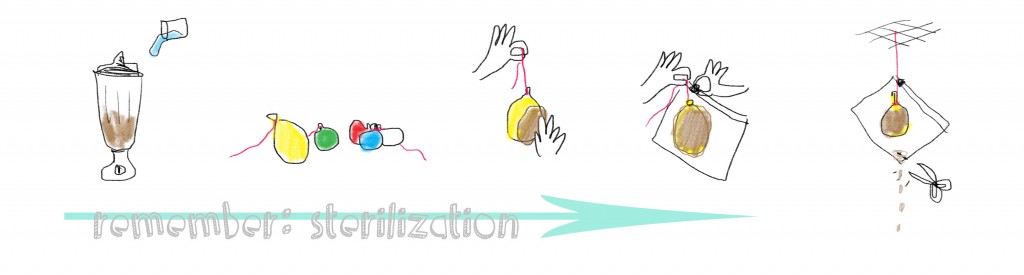

For the blender part, the ratio of mycelium and straw to water is approximately 2:1.

Hang the balls in a separate area to avoid contamination. After 3 to 5 days the balls turn visibly white, which shows that the mycelium is growing.

After 10 days, harvest the balls and pop the balloons, and voilà!

Set them aside and let their interiors dry for a day (the balloons had kept the insides sealed).

mycelium network

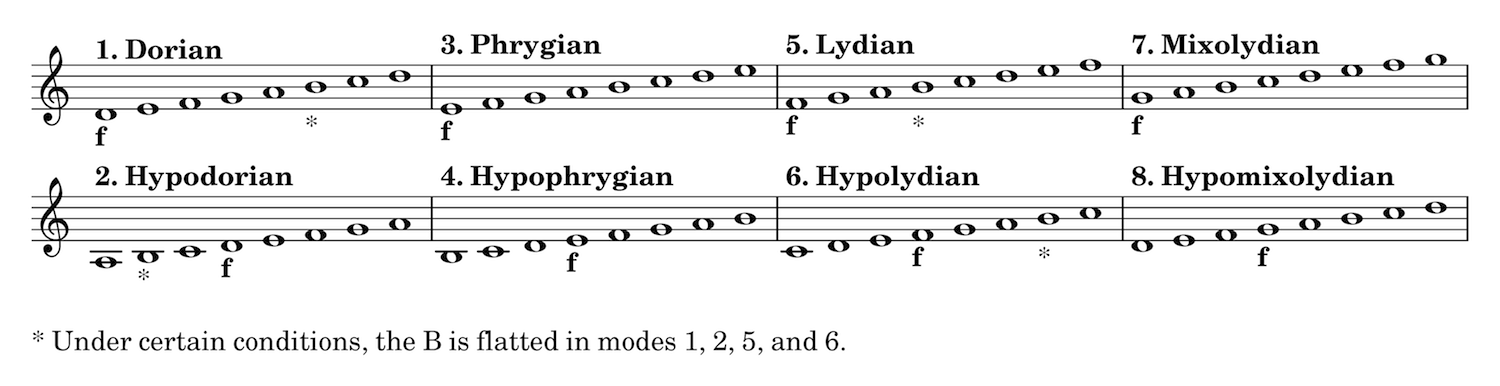

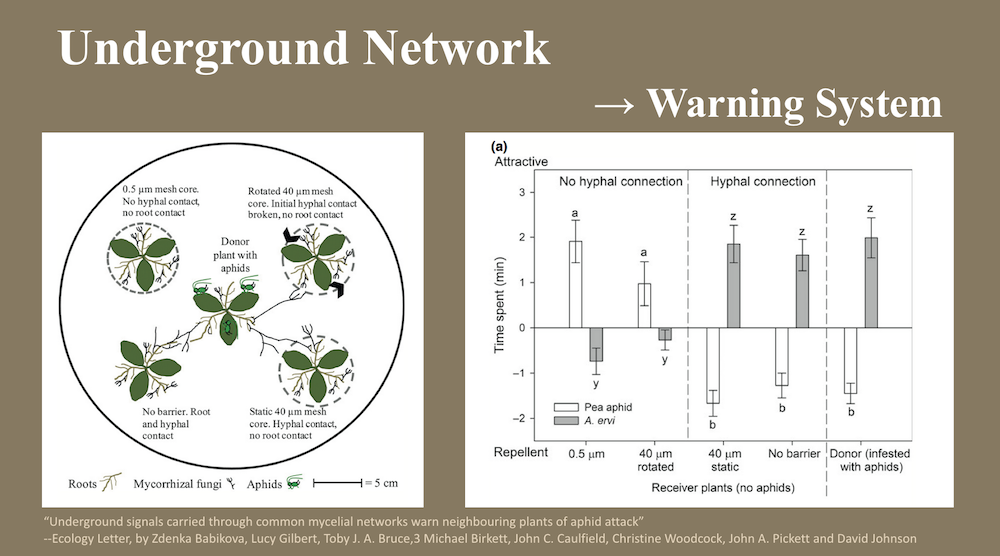

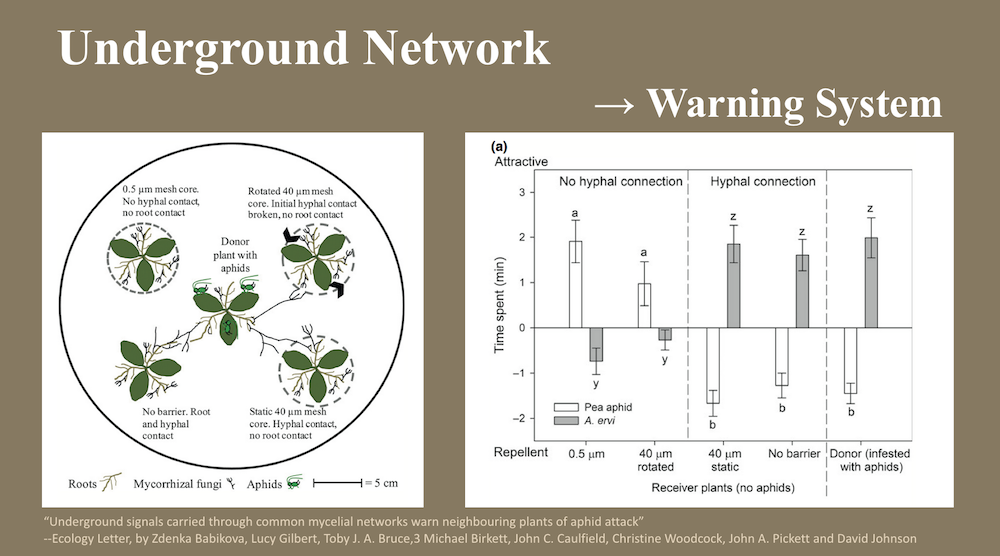

I’m also interested in how mycelium communicates. The roots of most land plants are colonised by mycorrhizal fungi that provide mineral nutrients in exchange for carbon. According to “Underground signals carried through common mycelial networks warn neighbouring plants of aphid attack” in Ecology Letters, by Zdenka Babikova, Lucy Gilbert, Toby J. A. Bruce, Michael Birkett, John C. Caulfield, Christine Woodcock, John A. Pickett and David Johnson, mycorrhizal mycelia can also act as a conduit for signaling between plants, serving as an early warning system for herbivore attack.

The experiment builds on the fact that Vicia faba, when attacked by aphids, emits plant volatiles, particularly methyl salicylate, which make the bean plant repellent to aphids but attractive to aphid enemies such as parasitoids. The setup uses five Vicia faba plants, only one of which is attacked by aphids. This donor plant is connected to the others either with or without root contact, and with or without mycelium (see the picture at right above). The result (picture at left above) shows that the plants connected to the donor (the one infested with aphids) by mycelium behave the same as the donor, producing volatiles that repel aphids and attract the aphids’ enemies. This means the underground messaging system allows neighboring plants to invoke herbivore defenses before they are attacked.

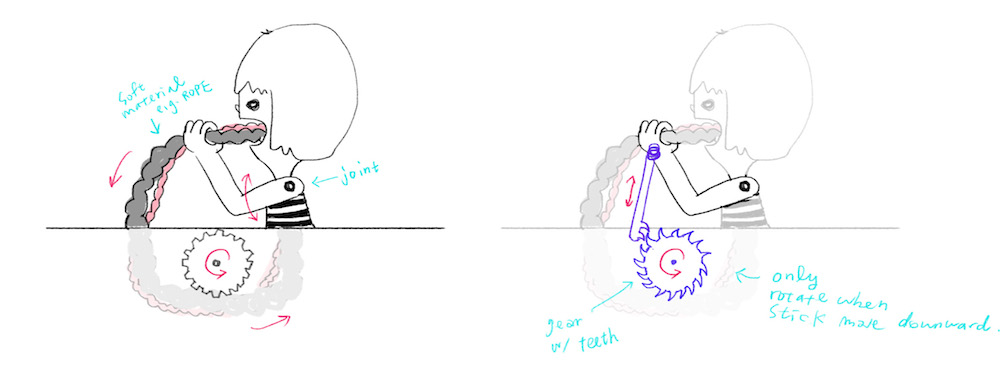

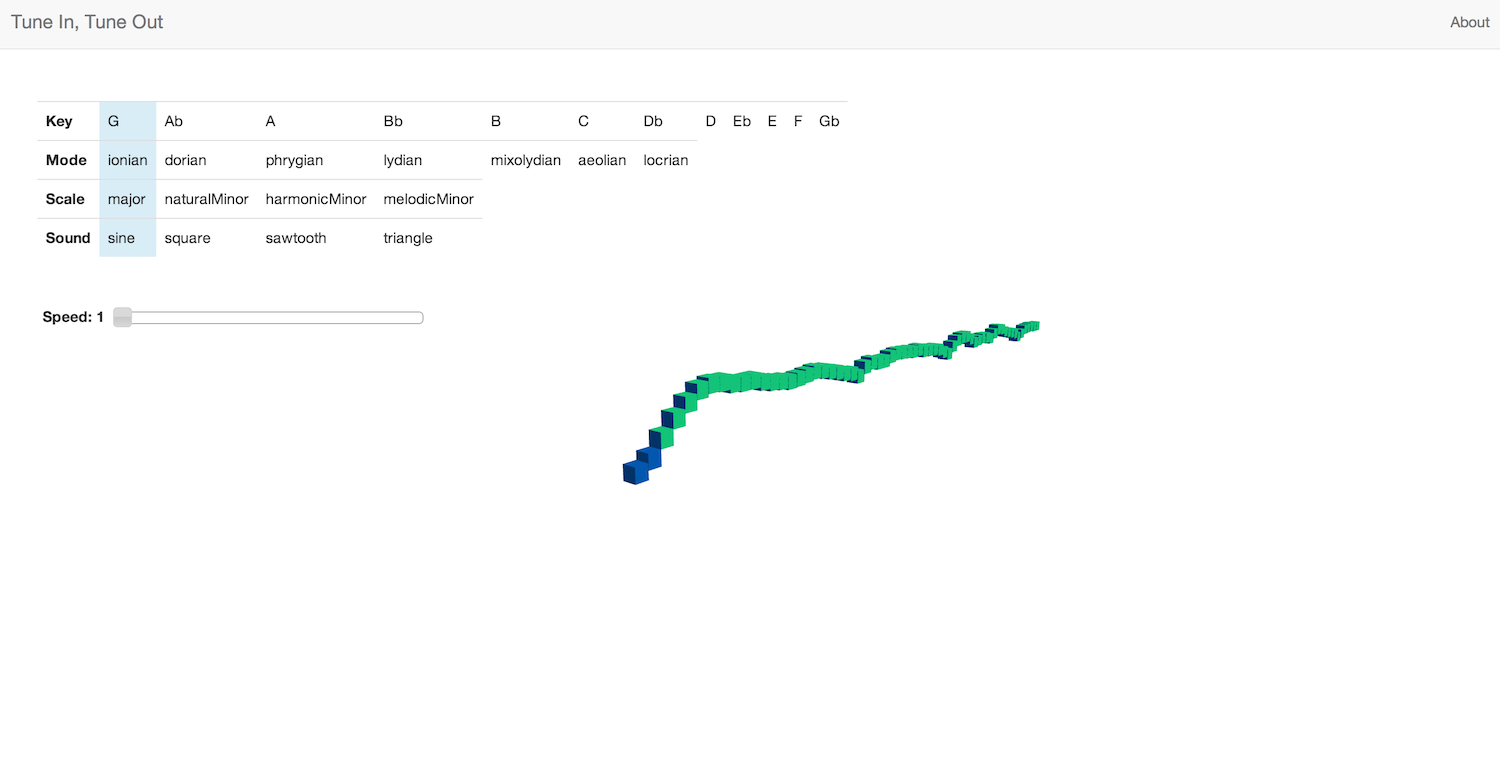

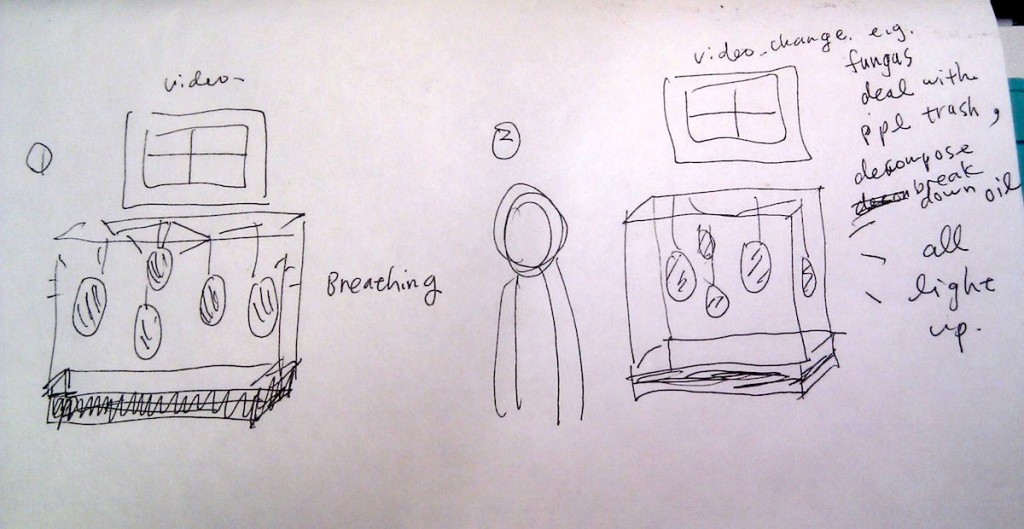

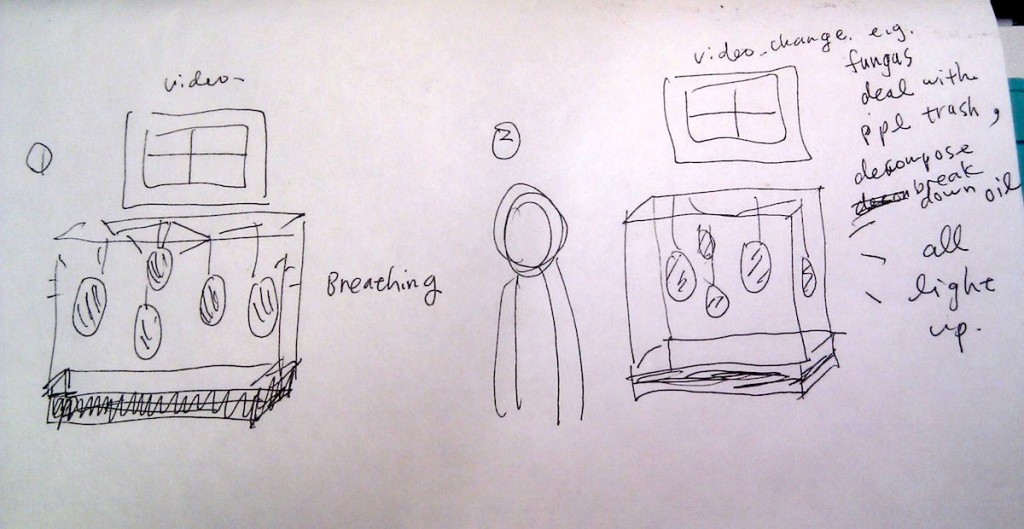

This interests me a lot, and I want to use it as content to tell people about the amazing behavior of fungi by visualizing the mycelium network. The idea is:

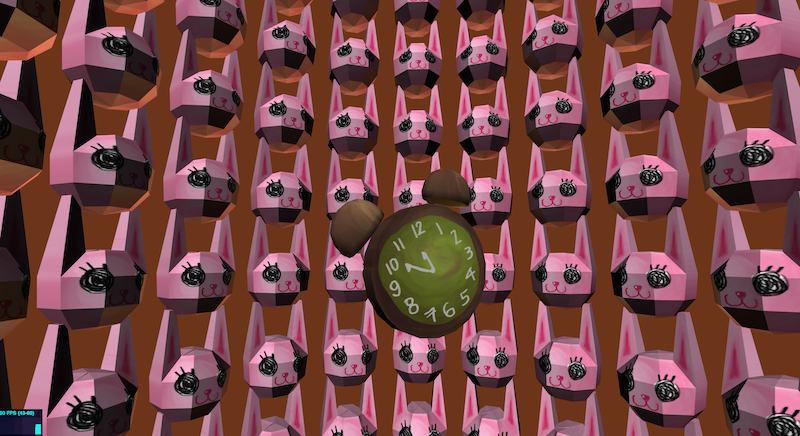

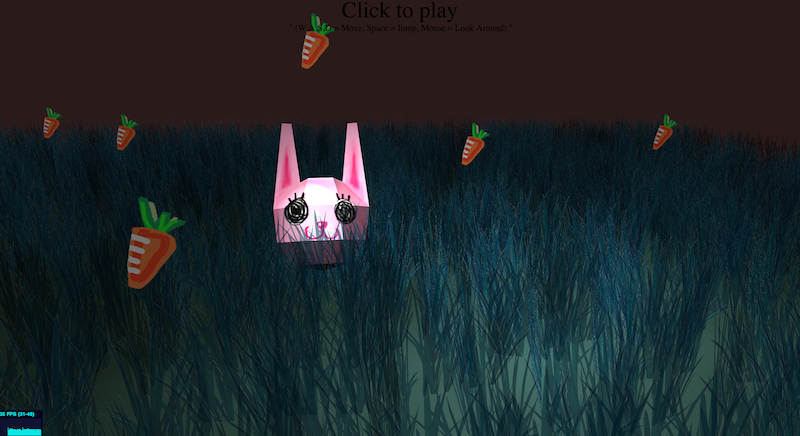

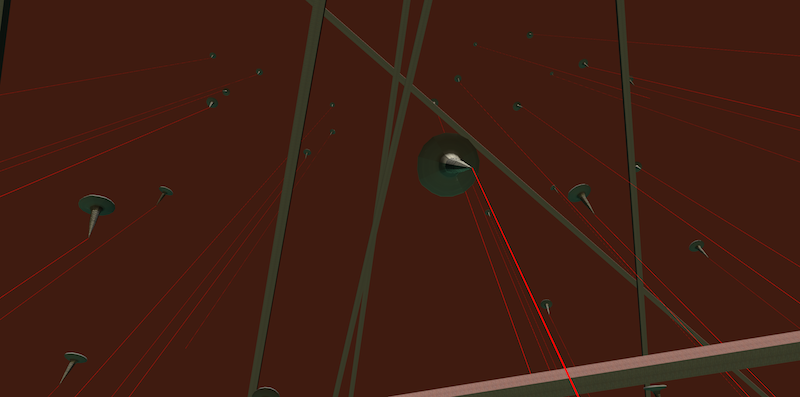

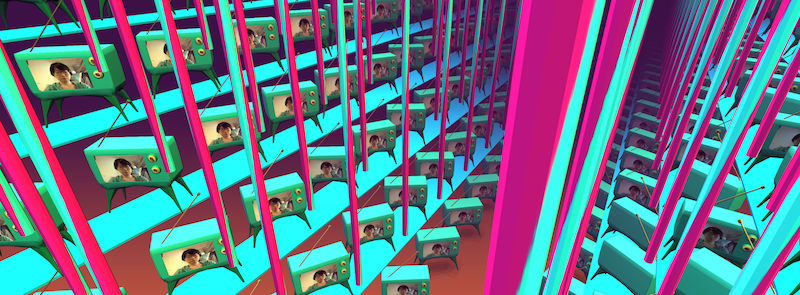

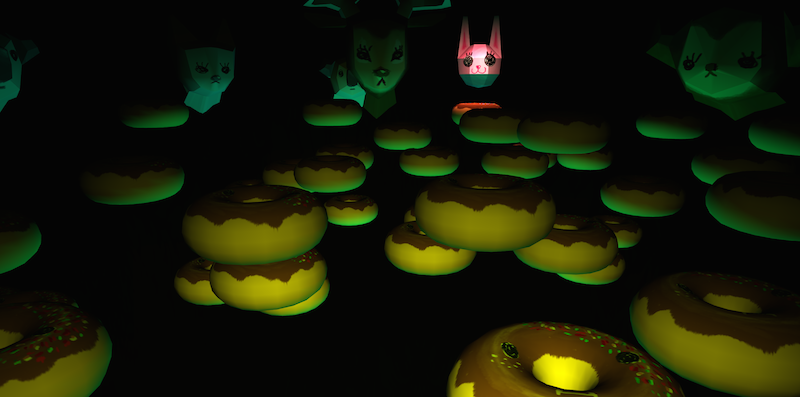

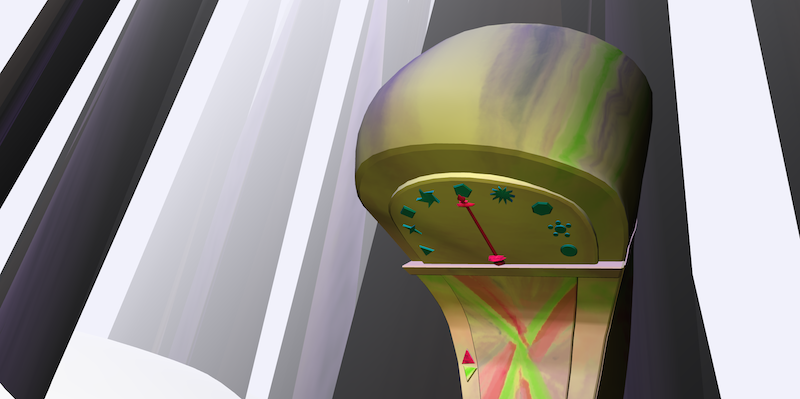

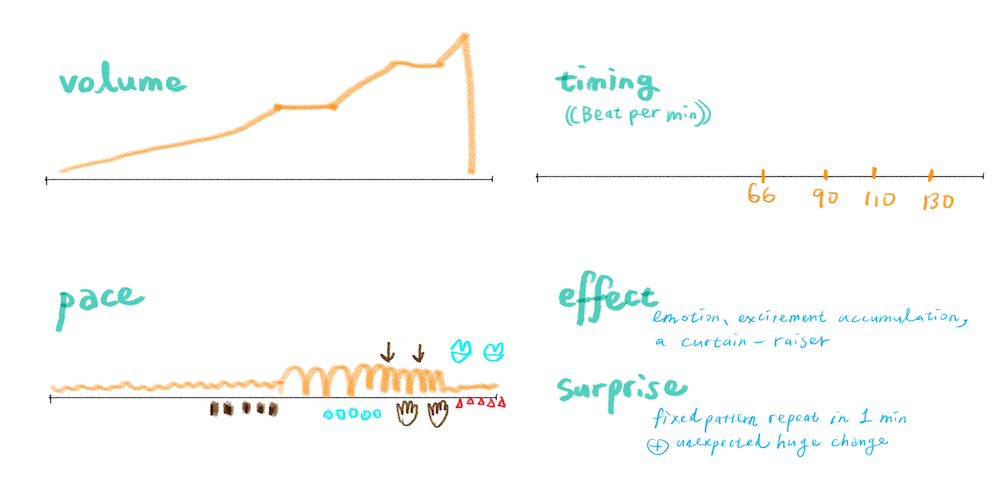

- When there’s no one around, each mycelium bulb breathes in its own pattern, shown with LEDs, and a video plays footage of fungus and mycelium life.

- Once someone comes near, the mycelium bulbs communicate with each other, lighting up and going dark one by one, and the video switches to human-related footage (e.g. garbage, oil spills, and mycoremediation).

footage Breathing, password: fungus

footage Aware, password: fungus

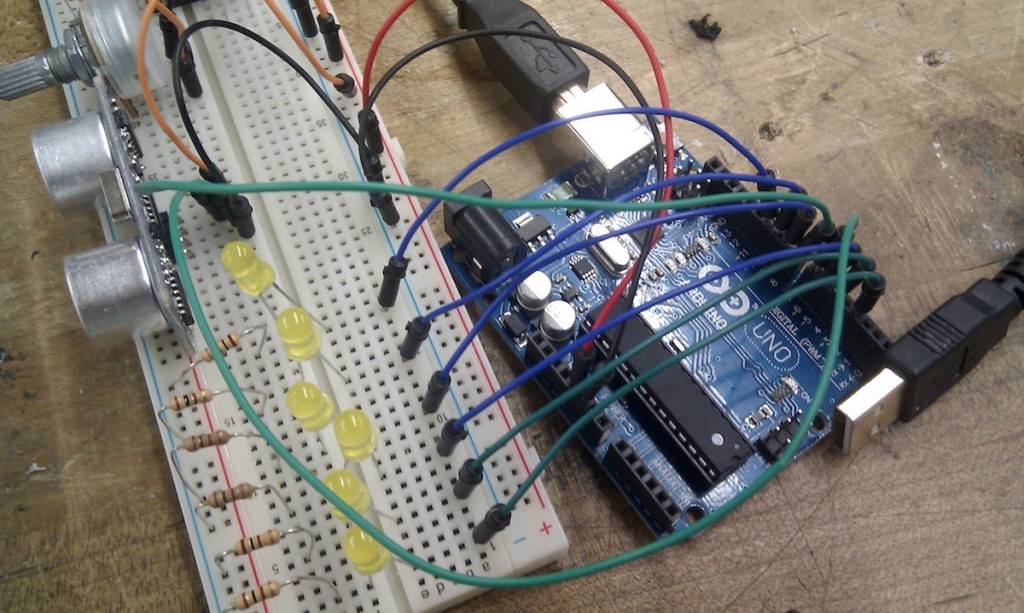

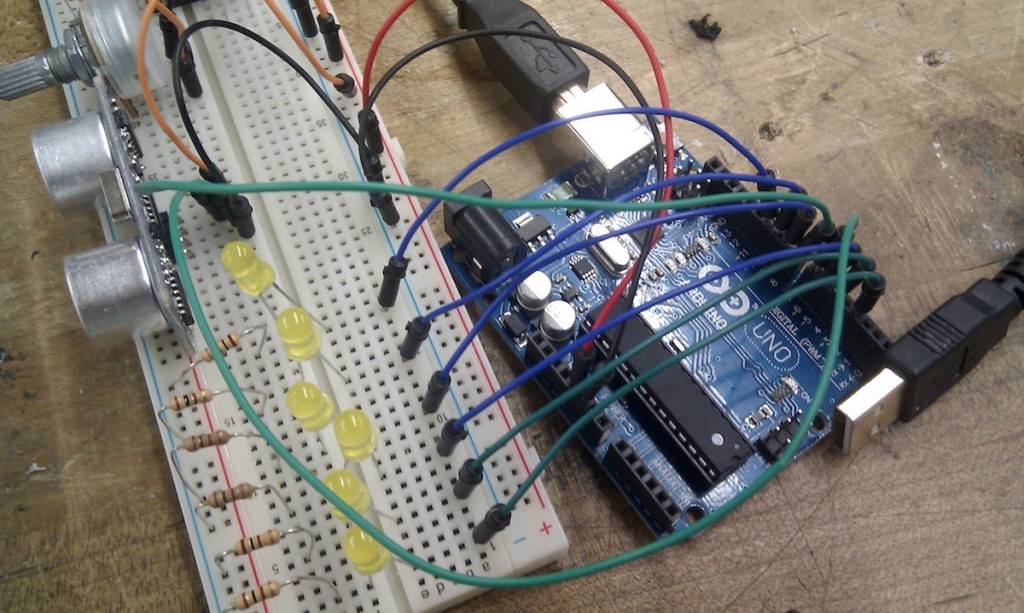

And here is my Arduino code. The LEDs are on the PWM pins (3, 5, 6, 9, 10, 11) and are driven with analogWrite.

    //#include <LED.h>
    #include <NewPing.h>

    #define TRIGGER_PIN 8
    #define ECHO_PIN 7
    #define MAX_DISTANCE 30 //in cm; NewPing returns 0 when nothing is in range

    //for ultrasonic sensor
    NewPing sonar(TRIGGER_PIN, ECHO_PIN, MAX_DISTANCE);
    int value;
    int interval; //to trigger the change of LEDs

    //for smoothing
    const int numReadings = 5;
    int readings[numReadings];
    int oriReading;
    int index = 0;
    int total = 0;
    int average = 0;

    //pin
    int ledPins[] = {3, 5, 6, 9, 10, 11};
    //ms elapsed in the current breath; long, because a 16-bit int (AVR boards) overflows after ~32 s
    long lastFade[6] = {0};
    int level[] = {10, 23, 45, 50, 100, 205};

    //output
    int maxV = 220;
    int minV = 5;

    //slope & intercept
    double ain[6], bin[6], aex[6], bex[6];

    //time
    double inTime[] = {1500, 1700, 1900, 2000, 2100, 2300};
    double pauseTime[] = {350, 400, 450, 500, 550, 600};
    double outTime[] = {2000, 2200, 2400, 2500, 2600, 2800};
    double thirdT[6], cycleT[6];
    double levels[6];
    boolean lightUp[6];
    int awareTime[] = {0, 1, 2, 3, 4, 5};
    int awareOriTime[] = {0, 1, 2, 3, 4, 5};

    void setup() {
      Serial.begin(9600);

      //smoothing
      for (int i = 0; i < numReadings; i++) {
        readings[i] = 0;
      }

      for (int i = 0; i < 6; i++) {
        pinMode(ledPins[i], OUTPUT);
        thirdT[i] = inTime[i] + pauseTime[i];
        cycleT[i] = inTime[i] + pauseTime[i] + outTime[i];
        //fade-in line: level = ain*t + bin
        ain[i] = (maxV - minV) / inTime[i];
        bin[i] = minV;
        //fade-out line: level = aex*t + bex
        aex[i] = (minV - maxV) / outTime[i];
        bex[i] = maxV - aex[i] * (inTime[i] + pauseTime[i]);
        lightUp[i] = false;
      }
    }

    unsigned long tstart[6];
    double time;

    void loop() {
      //ultrasonic sensor
      oriReading = sonar.ping();
      value = (int) oriReading / US_ROUNDTRIP_CM;

      for (int thisChannel = 0; thisChannel < 6; thisChannel++) {
        //if someone is detected, blink the bulbs one after another
        if (value > 0) {
          //toggle this bulb on every 6th step of its counter
          if ((awareTime[thisChannel]) % 6 == 0) {
            lightUp[thisChannel] = !lightUp[thisChannel];
          }
          if (lightUp[thisChannel] == true)
            levels[thisChannel] = 255;
          else
            levels[thisChannel] = 0;
          analogWrite(ledPins[thisChannel], levels[thisChannel]);

          //determine whether to restart the cycle of time
          awareTime[thisChannel] += 1;
          if (awareTime[thisChannel] >= (180))
            awareTime[thisChannel] = awareOriTime[0];
        }
        //if not, do the breathing LED pattern
        else {
          if (lastFade[thisChannel] <= inTime[thisChannel]) {
            levels[thisChannel] = int( ain[thisChannel] * lastFade[thisChannel] + bin[thisChannel] );
          }
          else if (lastFade[thisChannel] <= thirdT[thisChannel]) {
            levels[thisChannel] = maxV;
          }
          else {
            levels[thisChannel] = int( aex[thisChannel] * lastFade[thisChannel] + bex[thisChannel] );
          }
          analogWrite(ledPins[thisChannel], levels[thisChannel]);
          delay(1);

          //determine whether to restart the cycle of time
          if (lastFade[thisChannel] >= cycleT[thisChannel]) {
            lastFade[thisChannel] = 0;
            tstart[thisChannel] = millis();
          }
          else {
            lastFade[thisChannel] = millis() - tstart[thisChannel];
          }
        }
      }
    }
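The breathing pattern in the Arduino code is a piecewise-linear curve: a ramp from minV up to maxV over inTime, a hold at maxV for pauseTime, and a ramp back down over outTime. As a rough illustration, the same arithmetic can be written as one plain C++ function that is easy to check away from the board. The name `breathLevel` and its parameters are hypothetical and do not appear in the sketch; the clamp after the end of the cycle is also an addition for this illustration (the sketch restarts the cycle instead).

```cpp
// Piecewise-linear "breath": fade in, hold, fade out.
// breathLevel is an illustrative name, not part of the Arduino sketch.
// t is the time in ms since the breath started.
int breathLevel(long t, double inTime, double pauseTime, double outTime,
                int minV, int maxV) {
    double holdEnd = inTime + pauseTime;   // thirdT in the sketch
    double cycleEnd = holdEnd + outTime;   // cycleT in the sketch

    if (t <= inTime) {
        // Fade in: same line as ain*t + bin, with ain = (maxV-minV)/inTime.
        return (int)(minV + (maxV - minV) * t / inTime);
    }
    if (t <= holdEnd) {
        // Hold at full brightness.
        return maxV;
    }
    if (t >= cycleEnd) {
        // Assumption for this sketch: stay at minV once the cycle is over.
        return minV;
    }
    // Fade out: same line as aex*t + bex, rewritten relative to holdEnd.
    return (int)(maxV + (minV - maxV) * (t - holdEnd) / outTime);
}
```

With the first bulb's timings, `breathLevel(750, 1500, 350, 2000, 5, 220)` is halfway up the fade-in and gives 112.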

And here is my Processing code, which switches the footage based on the serial signal received from the Arduino.

    import processing.serial.*;
    import processing.video.*;
    import java.awt.MouseInfo;
    import java.util.Arrays;
    import java.util.Collections;
    import java.awt.Rectangle;

    Movie aware;
    Movie grow;
    boolean playGrow = true;
    Serial myPort;

    void setup() {
      size(displayWidth, displayHeight);
      if (frame != null) {
        frame.setResizable(true);
      }
      background(0);

      // Load and play the video in a loop
      aware = new Movie(this, "aware_2.mp4");
      grow = new Movie(this, "grow_v2.mp4");
      aware.loop();
      grow.loop();

      // println(Serial.list());
      String portName = Serial.list()[5];
      myPort = new Serial(this, portName, 9600);
    }

    void movieEvent(Movie m) {
      m.read();
    }

    void draw() {
      if (playGrow)
        image(grow, 0, 0, width, height);
      else
        image(aware, 0, 0, width, height);
    }

    void serialEvent(Serial myPort) {
      int inByte = myPort.read();
      println(inByte);
      if (inByte > 10)
        playGrow = false;
      else
        playGrow = true;
    }

    void keyPressed() {
      if (key == '1')
        playGrow = true;
      if (key == '2')
        playGrow = false;
    }

    int mX;
    int mY;

    boolean sketchFullScreen() {
      return true;
    }

    void mouseDragged() {
      frame.setLocation(
        MouseInfo.getPointerInfo().getLocation().x - mX,
        MouseInfo.getPointerInfo().getLocation().y - mY);
    }

    public void init() {
      frame.removeNotify();
      frame.setUndecorated(true);
      frame.addNotify();
      super.init();
    }
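The Processing code reads one byte per serial event and switches to the aware footage when that byte is greater than 10. The Arduino code opens the port with `Serial.begin(9600)` and declares smoothing variables (`readings`, `total`, `average`), but the part that averages the readings and writes them out is not shown. Below is a minimal sketch of what the sending side could look like, assuming a running average over the last five distance readings that is clamped into a single byte. `DistanceSmoother`, `add`, and `toByte` are hypothetical names for this illustration; on the Arduino the result would presumably be passed to `Serial.write()`.

```cpp
// Running average over the last numReadings distance readings (in cm).
// DistanceSmoother and toByte are illustrative names, not part of the
// original Arduino sketch.
const int numReadings = 5;   // same window size as in the Arduino code

struct DistanceSmoother {
    int readings[numReadings] = {};  // circular buffer, starts at zero
    int index = 0;                   // slot to overwrite next
    int total = 0;                   // sum of the stored readings

    // Store a new reading and return the current average.
    int add(int cm) {
        total -= readings[index];
        readings[index] = cm;
        total += cm;
        index = (index + 1) % numReadings;
        return total / numReadings;
    }
};

// Clamp a value into 0..255 so it fits the single byte that the
// Processing code reads with myPort.read().
unsigned char toByte(int cm) {
    if (cm < 0) return 0;
    if (cm > 255) return 255;
    return (unsigned char)cm;
}
```

With this window, a person stepping in at 20 cm pushes the average past the threshold of 10 on the third reading (the averages are 4, 8, 12), while a single stray echo, at most 30 cm given MAX_DISTANCE, averages to at most 6 and does not flip the footage.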

photos of Fabrication

For further development, I’m thinking about cooperating with Kate’s “mushroom craft” and holding some craft workshops! Making these mycelium light bulbs took me through fabrication work I had never tried before, and it felt great! I think direct hands-on contact is the most effective way to bring people and materials closer together.

By starting production with finding and growing the material, we can better appreciate the resources we take from nature and become more aware of environmental issues: not just sitting there receiving the news from TV, but actually caring, because you feel those issues affecting the fabrication process directly. The maker/crafter spirit is one of the answers for the future.