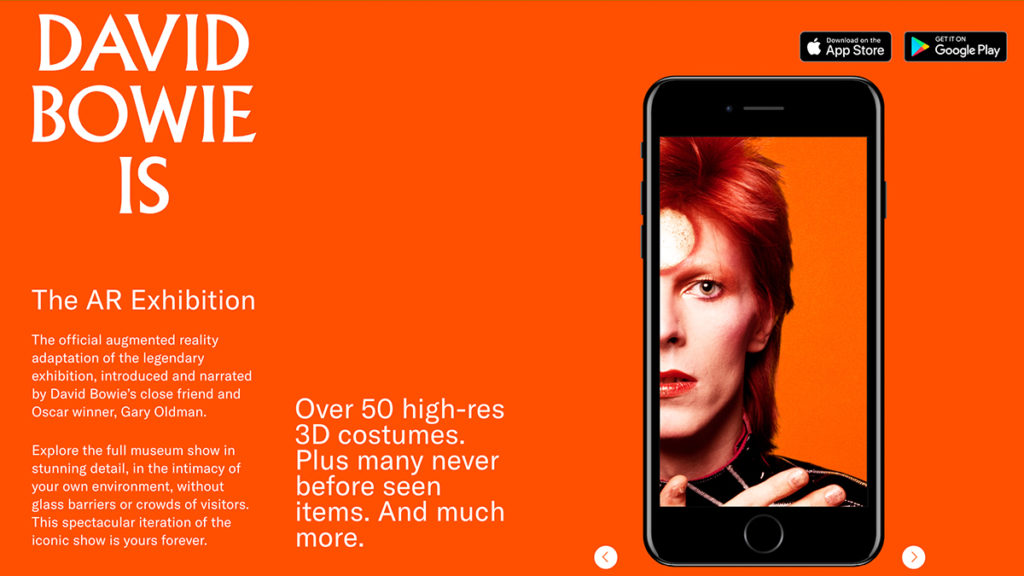

David Bowie Is – AR Exhibition

David Bowie Is – AR Exhibition is an AR project by The David Bowie Archive, Sony Music Entertainment (Japan) Inc. and Planeta. In this post, I jot down some of my development work as Lead Developer, under Director Nick Dangerfield, Art Director Seth Tillett, and Tech Director Dan Brewster.

Paper Interaction

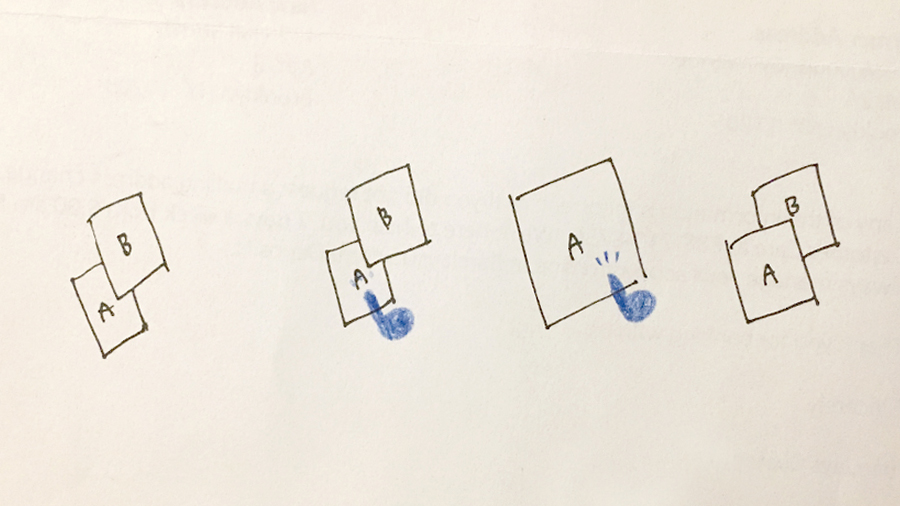

The IRL exhibition has a lot of 2D materials, and for browsing them in a mobile app we wanted to maintain a subtle balance between efficiency and AR-ness. We tried several approaches, such as shuffling and fading in/out, but ultimately it felt most natural to see them lying or leaning on some sort of surface.

And just as you would expect in real life, we made the 2D materials move with your fingertip.
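To give a rough idea of what "move with your fingertip" can mean on a tabletop, here is a minimal sketch in Python. It is not the app's actual code: `Vec3`, `ray_plane_hit`, and `DraggablePaper` are hypothetical names, and it assumes the touch has already been converted into a world-space ray by the AR framework. The touch ray is intersected with the table plane, and the paper keeps the same offset from the hit point for the whole drag so it does not jump under the finger.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, o: "Vec3") -> "Vec3":
        return Vec3(self.x + o.x, self.y + o.y, self.z + o.z)

    def __sub__(self, o: "Vec3") -> "Vec3":
        return Vec3(self.x - o.x, self.y - o.y, self.z - o.z)

    def scale(self, s: float) -> "Vec3":
        return Vec3(self.x * s, self.y * s, self.z * s)

    def dot(self, o: "Vec3") -> float:
        return self.x * o.x + self.y * o.y + self.z * o.z


def ray_plane_hit(origin: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Return where a touch ray meets the table plane, or None if it misses."""
    denom = direction.dot(plane_normal)
    if abs(denom) < 1e-6:      # ray is parallel to the table
        return None
    t = (plane_point - origin).dot(plane_normal) / denom
    if t < 0:                  # plane is behind the camera
        return None
    return origin + direction.scale(t)


class DraggablePaper:
    """Hypothetical 2D artifact lying on the table plane."""

    def __init__(self, position: Vec3) -> None:
        self.position = position
        self._grab_offset: Optional[Vec3] = None

    def begin_drag(self, hit: Vec3) -> None:
        # Remember where on the paper the finger landed.
        self._grab_offset = self.position - hit

    def drag_to(self, hit: Vec3) -> None:
        # Keep the same grab offset so the paper follows the fingertip.
        if self._grab_offset is not None:
            self.position = hit + self._grab_offset

    def end_drag(self) -> None:
        self._grab_offset = None
```

In use, `begin_drag` would be called with the first hit point of a touch, `drag_to` on every touch-move, and `end_drag` on release.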

The effect works especially well on small paper cutouts.

(Note – all videos in this post are direct screen recordings from my old iPhone 6s.)

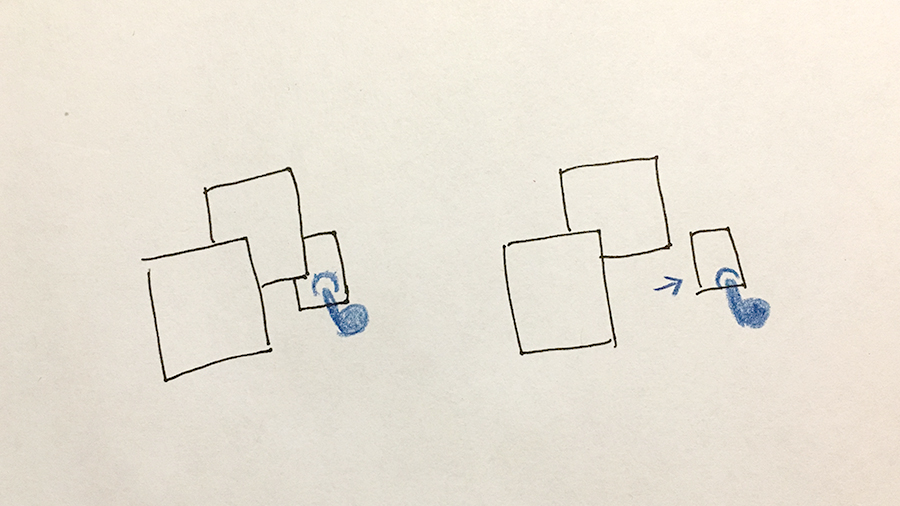

And in a tabletop AR app, some 2D artifacts need to be stacked for everything to fit, so we needed a way to shuffle them.

After testing, we found that bringing the “focus” artifact to the front of the stack after it has been sent back feels the most natural and intuitive.

Paper stack shuffle.
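One possible reading of the shuffle, sketched in Python rather than taken from the app: the stack is an ordered list with the focused artifact at the front, sending the front sheet back promotes the next one to focus, and any sheet can later be pulled back to the front. `PaperStack` and its method names are hypothetical and only illustrate the ordering logic, not the animation.

```python
from collections import deque
from typing import Deque, Iterable, List, Optional


class PaperStack:
    """Hypothetical stack of 2D artifacts; index 0 is the front (focus)."""

    def __init__(self, artifacts: Iterable[str]) -> None:
        self._stack: Deque[str] = deque(artifacts)

    @property
    def focus(self) -> Optional[str]:
        return self._stack[0] if self._stack else None

    def send_front_to_back(self) -> Optional[str]:
        # The front sheet goes to the back; the next sheet becomes the focus.
        if len(self._stack) > 1:
            self._stack.rotate(-1)
        return self.focus

    def bring_to_front(self, artifact: str) -> None:
        # Pull a sheet out of the stack and place it on top.
        # Raises ValueError if the artifact is not in the stack.
        self._stack.remove(artifact)
        self._stack.appendleft(artifact)

    def order(self) -> List[str]:
        return list(self._stack)
```

A short usage example with made-up artifact names:

```python
stack = PaperStack(["lyrics", "sketch", "photo"])
stack.send_front_to_back()      # "sketch" is now the focus
stack.bring_to_front("lyrics")  # "lyrics" returns to the front
```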

Scene Changes Mask

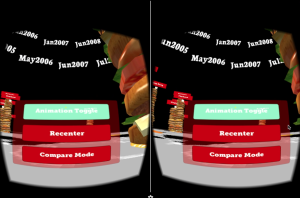

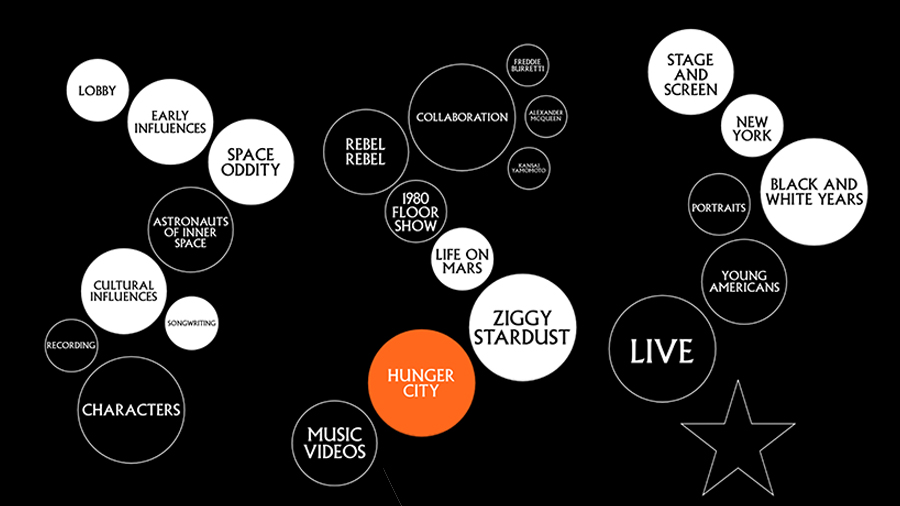

To pack the whole physical show into a tabletop AR mobile app, it was important to find a way to navigate the rich content.

It includes over 400 high-resolution captures of David Bowie’s costumes, sketches, handwritten lyrics, notes, music videos and original works of art, presented in striking arrangements and immersive settings, as well as dozens of never-before-seen items, including archival videos, drawings, photographs, and notes.

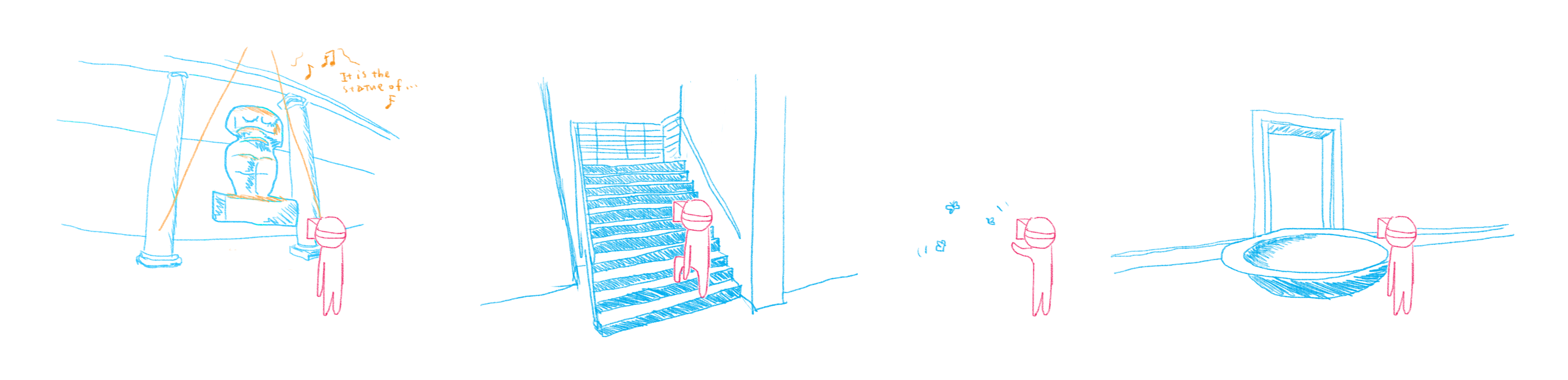

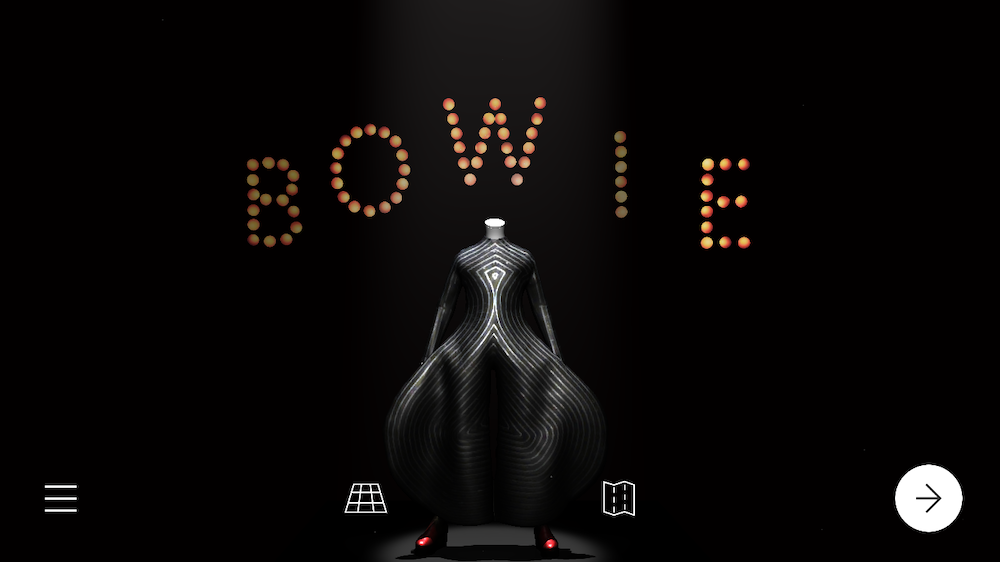

Within the show, we decided to condense each section into a digestible diorama, and to make the dioramas navigable through a map.

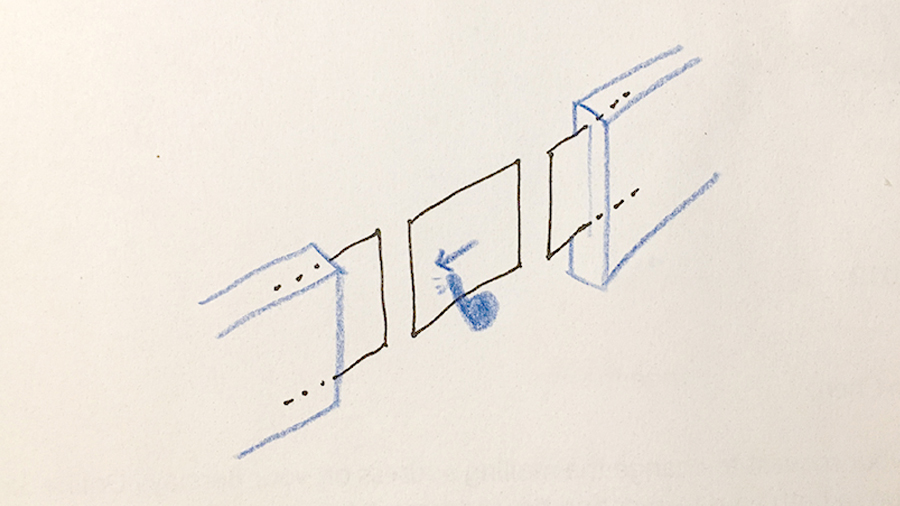

And within each diorama, we needed some sub-scene changes as well to accommodate all the content.

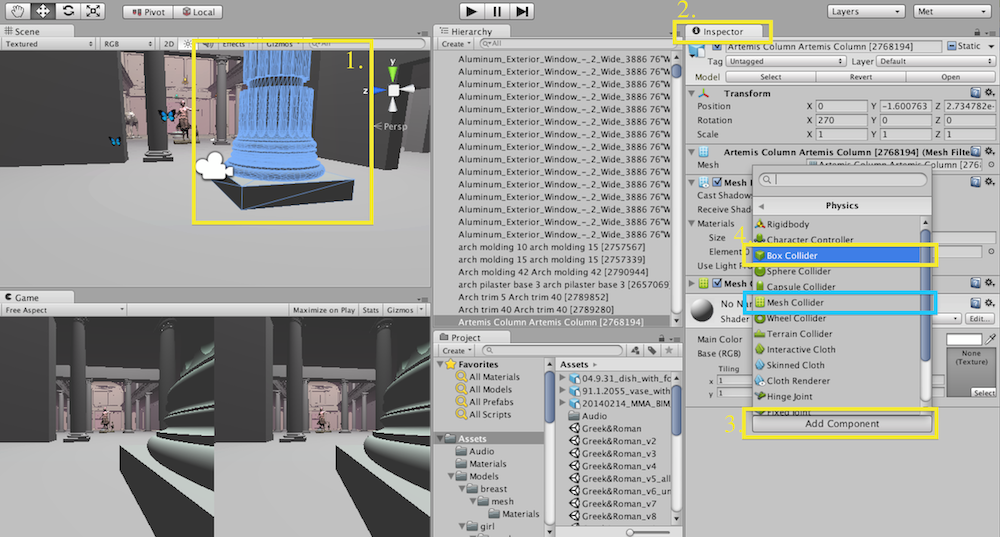

For this, I used a lot of invisible 3D masks.

The mask uses the depth buffer to occlude content and prevent it from being shown. It is a simple but effective graphics trick, and more can be found here.

For example, in Hunger City, two masks are placed at the sides of the stage to hide the off-stage content.
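The depth-mask idea can be shown with a toy one-row software framebuffer in Python. This is only a sketch of the general technique, not the shader used in the app: `Framebuffer` and its `draw` method are hypothetical, and real engines do this on the GPU. The mask is drawn first and writes depth but no color, so anything drawn later behind it fails the depth test and the background (in AR, the camera feed) stays visible.

```python
import math
from typing import List, Optional


class Framebuffer:
    """Toy one-row framebuffer with a depth test (smaller depth = closer)."""

    def __init__(self, width: int, clear_color: str = ".") -> None:
        self.color: List[str] = [clear_color] * width
        self.depth: List[float] = [math.inf] * width

    def draw(self, x_start: int, x_end: int, depth: float,
             color: Optional[str], write_color: bool = True) -> None:
        for x in range(max(0, x_start), min(len(self.color), x_end)):
            if depth < self.depth[x]:       # depth test
                self.depth[x] = depth       # always write depth
                if write_color and color is not None:
                    self.color[x] = color   # an invisible mask skips this step

    def row(self) -> str:
        return "".join(self.color)


fb = Framebuffer(10)
# Two invisible masks at the sides of the "stage", drawn before the content.
fb.draw(0, 3, depth=1.0, color=None, write_color=False)
fb.draw(7, 10, depth=1.0, color=None, write_color=False)
# Content that spans the whole row but sits behind the mask plane.
fb.draw(0, 10, depth=2.0, color="#")
print(fb.row())  # ...####...
```

The draw order matters in this sketch: the masks have to be rendered before the content they hide, otherwise the content's color is already in the buffer by the time the mask writes its depth.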

Scene Making

Under the guidance of the Director and Art Director, and with feedback from the whole development team, I built the AR scenes, including: Early Influences, Space Oddity, Cultural Influences, Songwriting, Recording, Characters, Collaborations, Life On Mars, Ziggy Stardust, Hunger City, Stage and Screen, New York, and Black & White Years. Below are in-app screen recordings of some of those scenes.

Early Influences

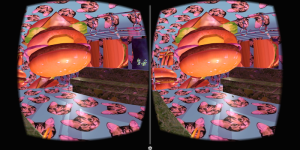

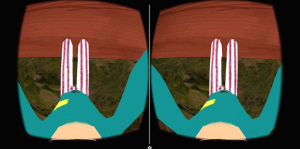

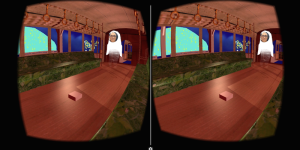

Hunger City

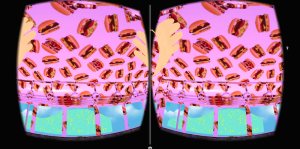

Black and White Years

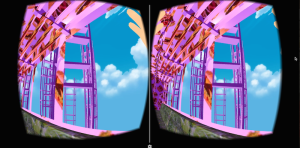

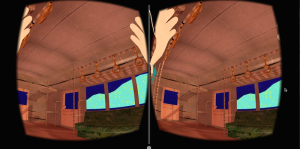

Stage and Screen

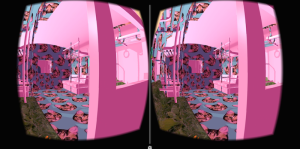

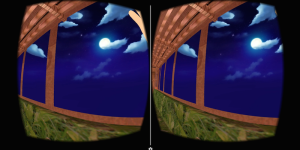

New York

Ziggy Stardust